Ian J. Thompson

How the Non-Physical Influences

Physics and Physiology: a proposal

The causal closure of the physical world is assumed everywhere in physics but has little empirical support within living organisms. For the spiritual to have effects in nature, and make a difference there, the laws of physical nature would have to be modified or extended. I propose that the renormalized parameters of quantum field theory (masses and charges) are available to be varied locally in order to achieve ends in nature. This does not add extra forces to nature but rescales the forces that already exist. We separate metric time in 4 dimensions from process time, understood as the order in which potentialities are actualized. This separation allows iterative forward and reverse steps in metric time to guide intermediate variations in the vacuum permittivities, so that charged bodies are moved toward specific targets at a later time. Then mental or spiritual influx could have effects in nature, and these should be measurable in biophysics experiments. With this proposal, we see how, after some centuries, ‘final causes’ could once again be seen as active in nature.

1 Mind-body problem and possible solutions

The mind-body problem has been a long-standing issue in standard science and philosophy, since many wonder how two kinds of things, so different, could causally affect each other. Many of you, however, have long assumed that such interactions are both possible and frequent. Everyone should know from their daily lives that minds can produce physical effects. But how do we cross the mind-body gap? Scientists should ask if there is something incomplete about physics, and whether any new predictions should be made that could be tested experimentally.

Various ways have been proposed to cross this gap within a theoretical understanding. The simplest is to take the physical and non-physical (mental, etc.) worlds as really a unified system of causes and effects, so what is mental is really physical, and vice versa. This approach is popular in many quarters, but we all should know that mental and physical things appear to have many distinct kinds of capabilities. Identifying the two substances runs the risk of losing what is distinctively interesting and useful about the non-physical. A second way is with the dualism that Descartes made popular, whereby there exist distinct physical and mental substances that are inter-connected in specific ways to be determined. This means that neither world is causally closed, because of their interactions. I base my proposal here on an extension of dualism, whereby we should expect many different levels or planes of reality. I derive this scheme from Emanuel Swedenborg, with his principles[1] and descriptions[2] of multiple discrete degrees.

2 The Non-Physical

In order to propose interactions, we need a clear theory of the non-physical as well as the physical, and we require especially a positive as well as negative characterization. Many of us recognize multiple discrete degrees or planes of existence, in ordered lists such as spiritual, purposes, mental, intentions, sensorimotor minds, spiritual bodies, final causes, and physical objects. In this article I focus on the last few degrees from the outermost mental. That is, I discuss how final causes could create targets which affect what happens in physics. This gives us an example of how causal processes could bridge the gap between adjacent planes, and this method could be later applied to the transition between the other kinds of discrete degrees. In this way we can see how dualism could possibly work.

If there are going to be non-physical effects on physical things, then something will have to be different in physics because of those effects. Effects on physiological systems would imply a range of effects in general cellular biochemistry. We could also have non-physical causes such as mental desires and intentions affecting the physiology of neurons in the brain, so we would have a causal path for how mental causes can lead to physical effects such as bodily movements. Questions of how that could be possible have been debated endlessly since Descartes’ dualism. In the proposal to be made here, we will be trying to keep the existing structure of physical theory working as much as possible, so that computers, microscopes and solar systems still all work and most of science will be unchanged. Rather than change everything and upset the groundwork of science, we will be looking for a ‘soft spot’ in physical theory whereby the laws of behavior of some things are not fixed. Rather, the laws in limited parts of physics will vary as part of a bigger picture, where that big picture includes non-physical processes such as minds.

According to physics, the bodies of living organisms are made of atoms in the form of molecules, where atoms are composed of protons, neutrons and electrons. Some of the molecules are very large proteins in cells. Many of these have to fold into specific shapes after they are assembled in order to perform their functions as enzymes and catalysts. The speed and directionality of this folding has been a puzzle in biophysics ever since Levinthal framed his paradox[3]. Many resolutions of this paradox have been proposed (a few publications each year), but there is still no general solution. Some proteins require the assistance of chaperone cage proteins that trap unfolded proteins and somehow cause them to fold, but how that is done reliably inside the cage is still not clear. I predict that non-physical influences in living cells could provide targets for protein folding arrangements, and so speed up the folding.
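Levinthal's argument is usually illustrated with a back-of-envelope count of conformations. The sketch below assumes, purely for illustration, a 100-residue chain, 3 backbone conformations per residue and $10^{13}$ conformations sampled per second; these are common textbook choices, not values taken from this paper.

```python
# Back-of-envelope version of Levinthal's paradox (illustrative assumptions only).
SECONDS_PER_YEAR = 3.156e7
AGE_OF_UNIVERSE_YEARS = 1.38e10


def levinthal_search_years(residues, conformations_per_residue, samples_per_second):
    """Years needed to visit every backbone conformation once by unbiased random search."""
    total_conformations = conformations_per_residue ** residues  # exact integer count
    return total_conformations / samples_per_second / SECONDS_PER_YEAR


years = levinthal_search_years(residues=100, conformations_per_residue=3, samples_per_second=1e13)
print(f"exhaustive search: {years:.1e} years "
      f"({years / AGE_OF_UNIVERSE_YEARS:.1e} times the age of the universe)")
```

Real proteins fold in milliseconds to seconds, so an unbiased search is ruled out by many orders of magnitude; that gap is what any resolution of the paradox has to explain.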

The laws that govern atoms and molecules in physics, it turns out, have their own structure of discrete degrees that can be recognized. Going in order from effects to causes, we can see three discrete degrees:

1. Classical Newtonian Physics (CNP) describes most large objects, with defined positions, masses m, interaction forces F, and then accelerations found from Newton’s second law F = ma.

2. Quantum Mechanics (QM) is more accurate for atoms and for the particles within atoms. Now there is a wave function that gives probabilities for selection of those definite positions. The wave function is governed by the Schrödinger equation for specified masses and interaction potentials.

3. Quantum Field Theory (QFT) describes with a Lagrangian the fields (defined by bare masses) and their interactions (defined by coupling constants). The theory produces the masses and interaction potentials to be used in Quantum Mechanics.

The causal order here is QFT ➔ QM ➔ CNP. But what determines the input masses and coupling constants in that starting Lagrangian? Perhaps there is another discrete degree above that of QFT.

3 Finding a Soft Spot in quantum physics

We now come to the issue of connecting the non-physical with the physical. We are going to follow the pattern of discrete degrees, and take the non-physical degrees as all above the physical discrete degrees. Then we try to find the connection between the lowest mental degree and the highest physical degree. The outermost mental degree is the sensorimotor mind, and the inmost physical degree is the Lagrangian of quantum field theory. Between them, therefore, is where a connection should be expected, maybe by some new discrete degree between them. I therefore propose: the non-physical influences the physical by generating changes in the values of the masses and charges in the field Lagrangian.

Are these Lagrangian parameters not fixed in standard physics? Most physicists think so, and if you look up “Parameters of the Standard Model” you can see fixed values listed[4]. But there is something here that is often overlooked. These Standard Model parameters are not the input values in the QFT Lagrangian, but differ from them. They are rather the output values that are reached by adjusting the input values through a process in QFT called renormalization. The complicated renormalization process is needed because, to start with, if finite input values are used, the output values are all, remarkably, infinite. Conversely, to get finite output values, the input values must be zero! This paradox caused consternation when it was discovered some 80 years ago, until physicists worked out some clever kinds of renormalization methods to have both finite input and output values.
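The paradox can be made concrete with a deliberately crude toy model, which is an illustrative assumption and not a real field-theory calculation: suppose the measured output $g$ is related to the input $g_0$ by $g = g_0 + a\,g_0^2/\varepsilon$, where the correction blows up as a regulator $\varepsilon \to 0$. Holding $g_0$ fixed sends $g$ to infinity, while holding $g$ fixed at its observed value forces $g_0 \to 0$. The coefficient `a` and the functional form are hypothetical.

```python
import math


def dressed_from_bare(g0, a, eps):
    """Toy 'dressing': output coupling = input + a correction that diverges as eps -> 0."""
    return g0 + a * g0 ** 2 / eps


def bare_from_dressed(g, a, eps):
    """Positive root g0 of (a/eps) g0^2 + g0 - g = 0, written to avoid cancellation."""
    return 2.0 * g / (1.0 + math.sqrt(1.0 + 4.0 * a * g / eps))


A = 1.0 / (3.0 * math.pi)   # hypothetical loop coefficient
G_OBSERVED = 1.0 / 137.0    # output value held fixed at its 'measured' size

for eps in (1.0, 1e-2, 1e-4, 1e-8):
    finite_input = dressed_from_bare(G_OBSERVED, A, eps)  # keep the input finite
    needed_input = bare_from_dressed(G_OBSERVED, A, eps)  # keep the output finite
    print(f"eps={eps:.0e}: output from finite input={finite_input:.3e}, "
          f"input needed for finite output={needed_input:.3e}")
```

As the regulator shrinks, the first column grows without bound and the second shrinks toward zero, which is the dilemma that renormalization methods were invented to handle.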

There are several methods for performing renormalization in modern field theory[5], and because they all give similar results physicists have become more or less happy with this situation. I give a schematic account of how one of these methods, dimensional regularization, works. Let $e_0$ and $m_0$ be the several input parameters for the bare charges and masses of the relevant particles. And let the output predictions, when calculations have been made for a suitably large number of multiple-order interactions of the various particles, be the dressed charges $e$ and masses $m$ of the particles, along with structural properties $F$ and reaction rates $\sigma$ that could be measured.

To calculate all these dressed interactions, we have to integrate over the spacetime, or the momentum-energy space, of all possible intermediate field processes, where spacetime has dimensionality $d = 4$. We can extrapolate the results for $d = 4$ to other values of $d$ near 4, but not exactly 4, and then the outputs $(e, m, F, \sigma)$ vary as some function of $(e_0, m_0; d)$. Physicists then discovered that $e$ and $m$ could be found near their physical values provided the inputs $e_0$ and $m_0$ were allowed to vary for each $d$ in some manner designed to give the experimentally observed charges and masses $e$ and $m$. They did not in the end need to evaluate at the limit $d = 4$, as long as they got sensible results for $F$ and $\sigma$ when $d$ was approaching 4. That is, quantum field theorists end up calculating expressions like $A(d) + B(d)/(4-d)$ in the limit as $d \to 4$, for some functions $A$ and $B$. They thus avoid the impossible calculation at exactly $d = 4$. The actual values of $e_0(d)$ and $m_0(d)$ are judged as temporary mathematical possibilities, and hence of no actual physical significance. When $e$ and $m$ were constrained to the measured charges and masses, the fact that the $d \to 4$ limits resulting for $F$ and $\sigma$ agreed extremely well with experiment gave great confidence that the theory was physically correct. This is the renormalization method.
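The key step of dimensional regularization, continuing an integral to non-integer dimension $d$, can be checked numerically on the standard one-loop ‘bubble’ integral in Euclidean space, $\int \frac{d^dk}{(2\pi)^d}\frac{1}{(k^2+\Delta)^2} = \frac{\Gamma(2-d/2)}{(4\pi)^{d/2}}\,\Delta^{d/2-2}$. Near $d = 4-\varepsilon$ it behaves as $\frac{1}{16\pi^2}\left(\frac{2}{\varepsilon} - \gamma + \ln 4\pi - \ln\Delta\right)$, a pole plus a finite remainder, and at exactly $d = 4$ it cannot be evaluated at all. This is a generic textbook integral chosen for illustration, not one taken from the paper, and the scale $\Delta$ is an arbitrary positive number.

```python
import math

EULER_GAMMA = 0.5772156649015329


def bubble_integral(d, delta):
    """Euclidean one-loop integral over d^d k / (2 pi)^d of 1/(k^2 + delta)^2,
    analytically continued to non-integer dimension d."""
    return math.gamma(2.0 - d / 2.0) / (4.0 * math.pi) ** (d / 2.0) * delta ** (d / 2.0 - 2.0)


def pole_plus_finite(eps, delta):
    """Expansion of the same integral at d = 4 - eps, dropping terms of order eps."""
    return (2.0 / eps - EULER_GAMMA + math.log(4.0 * math.pi) - math.log(delta)) / (16.0 * math.pi ** 2)


DELTA = 2.0
differences = {}
for eps in (0.5, 0.1, 0.01, 0.001):
    exact = bubble_integral(4.0 - eps, DELTA)
    approx = pole_plus_finite(eps, DELTA)
    differences[eps] = exact - approx
    print(f"d = {4.0 - eps:<6}: integral = {exact:10.4f}, pole + finite part = {approx:10.4f}")

try:
    bubble_integral(4.0, DELTA)  # Gamma(0) is a pole: the d = 4 value does not exist
except ValueError as err:
    print("d = 4 exactly:", err)
```

The integral itself grows without bound as $d \to 4$, while its difference from ‘pole plus finite part’ shrinks; renormalization absorbs the pole into the bare parameters and keeps the finite part.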

Given a renormalization procedure defined in this way, it nevertheless turns out to be easy to get different output predictions for $F$ and $\sigma$ at different places $x$ in spacetime. We simply repeat the renormalization method at each location[6] while using, instead of the known constants $e$ and $m$, the locally observed varying values $e(x)$ and $m(x)$. Using those observed values, we thus derive varying structural and reaction properties $F(x)$ and $\sigma(x)$ at the different places. Since the inputs to the renormalization method have always been supposed to be the observed $e$ and $m$ values, if those are experimentally observed to vary with location, then there can be no objection (apart from prejudice) to using the observed varying values as inputs. Some mechanisms are presumably necessary to generate specific constant or varying values, but such mechanisms are not given within today’s standard field theory.[7]
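As an illustration of repeating the renormalization at each location, the sketch below treats a locally observed fine-structure constant $\alpha(x)$ as the renormalization condition at each place and derives one output from it: the effective coupling at a higher momentum scale $Q$, from the leading-logarithm one-loop QED formula with only the electron loop, $\alpha_{\text{eff}}(Q) = \alpha/[1 - (\alpha/3\pi)\ln(Q^2/m_e^2)]$. The three locations and their fractional shifts are hypothetical, and this derived quantity merely stands in for the structural and reaction properties mentioned above.

```python
import math

ELECTRON_MASS_MEV = 0.51099895
ALPHA_LAB = 1.0 / 137.035999


def effective_alpha(alpha_local, q_mev, m_mev=ELECTRON_MASS_MEV):
    """Leading-log one-loop QED coupling at momentum scale q (electron loop only),
    given the coupling alpha_local fixed by measurement at the scale m."""
    log_term = math.log(q_mev ** 2 / m_mev ** 2)
    return alpha_local / (1.0 - alpha_local / (3.0 * math.pi) * log_term)


# Hypothetical locally observed couplings (fractional shifts chosen only for illustration).
observed = {"x1": ALPHA_LAB, "x2": ALPHA_LAB * (1.0 - 1e-5), "x3": ALPHA_LAB * (1.0 + 1e-5)}
Q_MEV = 1000.0  # an arbitrary probe scale of 1 GeV

derived = {place: effective_alpha(a, Q_MEV) for place, a in observed.items()}
for place, value in derived.items():
    print(f"{place}: alpha(m_e) = {observed[place]:.9f} -> alpha(1 GeV) = {value:.9f}")
```

The same formula is applied everywhere; only its input changes from place to place, so the derived outputs inherit the local variation.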

If that is the case with quantum field theory, then maybe varying coupling constants have already been seen? John Webb in Sydney, in papers[8],[9] appearing in 2001 and 2011, claims exactly that. His team found from astronomical spectroscopy some evidence that the energy levels in atoms showed slightly weaker electric forces in distant stars, by about 1 part in $10^5$. He observed changes in the wavelengths of spectral lines, which must have been caused by different energy levels in the distant atoms compared with the same atoms on earth. So, we see, variations in some electric coupling strengths are conceivable, and may indeed have been seen already.
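Webb's group used what is known as the many-multiplet method, in which each transition's rest-frame wavenumber responds to a change in $\alpha$ as $\omega = \omega_0 + q\,[(\alpha/\alpha_0)^2 - 1]$, with a sensitivity coefficient $q$ that differs from line to line. A common redshift rescales all lines by the same factor, so only the line-to-line pattern reveals $\Delta\alpha/\alpha$. The sketch below uses made-up values of $\omega_0$ and $q$ for three hypothetical lines; they are not real atomic data.

```python
def shifted_wavenumber(omega0, q, dalpha_over_alpha):
    """Many-multiplet form: rest-frame wavenumber (cm^-1) for a fractional change in alpha."""
    x = (1.0 + dalpha_over_alpha) ** 2 - 1.0
    return omega0 + q * x


# Hypothetical lines: (lab wavenumber omega0 in cm^-1, sensitivity q in cm^-1).
LINES = {"anchor": (35000.0, 50.0), "positive_q": (38000.0, 1500.0), "negative_q": (39000.0, -1200.0)}


def observed_wavenumbers(dalpha_over_alpha, redshift):
    """Wavenumbers seen on Earth: alpha-shifted at the source, then divided by (1 + z)."""
    return {name: shifted_wavenumber(w0, q, dalpha_over_alpha) / (1.0 + redshift)
            for name, (w0, q) in LINES.items()}


def ratios_to_anchor(wavenumbers):
    """Redshift-independent ratios of each line to the low-sensitivity anchor line."""
    return {name: w / wavenumbers["anchor"] for name, w in wavenumbers.items()}


lab = ratios_to_anchor(observed_wavenumbers(0.0, 0.0))
distant = ratios_to_anchor(observed_wavenumbers(-1e-5, 1.5))
for name in LINES:
    print(f"{name:>10}: fractional change in ratio = {distant[name] / lab[name] - 1.0:+.2e}")
```

Lines with positive and negative $q$ move in opposite directions relative to the anchor, a signature that a simple redshift cannot produce.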

We are now going to investigate the fine-tuning of mass and charge parameters not just varying slowly between stars but varying locally and within the much smaller scale of individual molecules. We will focus, as Webb did, on electric forces. Now we must conceive of not only charge changes varying over the age of the universe, but also varying in micro-seconds in living organisms to achieve their needed ends in nature.

We considered above the possibility of a new discrete degree between quantum field theory and the sensorimotor mind. I now propose a particular form of that degree as involving ‘targets’ that are derived from final causes from the mind, and which operate a control system to achieve those targets by adjusting the electric parameters of physical fields just in the needed parts of spacetime. More proposed details will be given below. This will be a new discrete degree inside quantum field theory, and it may in the future also help to solve problems of naturalness and fine tuning in regular field theory. For now, we suggest that adjusting electric parameters is specific to living organisms, and that it occurs at all scales of psychology and biology: every day and every second of our lives.

I now remind the reader of the standard mathematical law for electrostatic attraction and repulsion. This is Coulomb’s inverse-square law: the electric force $\mathbf{F}_1$ on charge $q_1$ at position $\mathbf{r}_1$ due to charge $q_2$ at $\mathbf{r}_2$ is

$$\mathbf{F}_1 = \frac{1}{4\pi\epsilon_0}\,\frac{q_1 q_2\,(\mathbf{r}_1-\mathbf{r}_2)}{|\mathbf{r}_1-\mathbf{r}_2|^3}$$

This force $\mathbf{F}_1$ is proportional to the product of the charges $q_1 q_2$, and it depends inversely on the square of the distance $|\mathbf{r}_1-\mathbf{r}_2|$ between the two particles. There is an overall scale factor of $1/(4\pi\epsilon_0)$ depending on what is called the ‘electric permittivity’ $\epsilon_0$.

It is clear here that varying the magnitude of one of the charges, $q_1$ say, will vary the force $\mathbf{F}_1$. That is what the non-physical input is proposed to do. We are not creating a new force but adjusting an existing one. There is no specific ‘mental force’, but if mental input could vary any of the charges $q_1$, then it could vary the forces on electrons and ions – on any kind of charged particle.
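A minimal numerical check of the statement above, in SI units: rescaling one charge rescales the force by the same factor, and rescaling the permittivity by the inverse factor has exactly the same effect on the force. The charges and separation are arbitrary illustrative values.

```python
import math

EPS0 = 8.8541878128e-12          # vacuum permittivity, F/m
E_CHARGE = 1.602176634e-19       # elementary charge, C


def coulomb_force(q1, r1, q2, r2, eps=EPS0):
    """Force (N) on charge q1 at r1 due to q2 at r2 (positions are (x, y, z) in metres)."""
    sep = [a - b for a, b in zip(r1, r2)]
    dist = math.sqrt(sum(c * c for c in sep))
    scale = q1 * q2 / (4.0 * math.pi * eps * dist ** 3)
    return tuple(scale * c for c in sep)


r1, r2 = (1e-9, 0.0, 0.0), (0.0, 0.0, 0.0)
base = coulomb_force(E_CHARGE, r1, -E_CHARGE, r2)
half_charge = coulomb_force(0.5 * E_CHARGE, r1, -E_CHARGE, r2)
double_eps = coulomb_force(E_CHARGE, r1, -E_CHARGE, r2, eps=2.0 * EPS0)
print("base force (N):", base)
print("q1 halved:     ", half_charge)
print("eps doubled:   ", double_eps)
```

The equivalence between halving a charge and doubling the permittivity is what allows the next section to keep charges fixed and vary $\epsilon$ instead.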

4 Varying Electric Permittivity of the vacuum

Jacob Bekenstein[10],[11] showed how to obtain very similar effects, while keeping the charges $q$ constant, by varying the permittivities $\epsilon$. The reason is that we can thereby keep more of standard physics the same, and only vary standard physics at a specific ‘soft spot’. That is because Maxwell’s field equations for electromagnetic processes are definite in implying the conservation of charge, but they allow permittivities $\epsilon$ to be varied considerably in, say, materials that are dielectrics, water, or capacitors. The four Maxwell equations are

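$$\nabla\cdot(\epsilon\mathbf{E}) = \rho \qquad\qquad \nabla\cdot\mathbf{B} = 0$$

$$\nabla\times\left(\frac{\mathbf{B}}{\mu}\right) - \frac{\partial(\epsilon\mathbf{E})}{\partial t} = \mathbf{J} \qquad\qquad \nabla\times\mathbf{E} + \frac{\partial\mathbf{B}}{\partial t} = \mathbf{0}$$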

where the electric field is $\mathbf{E}$ and the magnetic field is $\mathbf{B}$. The electric charge density is $\rho$, and the current density is $\mathbf{J}$. The two equations on the left imply conservation of charge. The quantity $\mu$ is the ‘magnetic permeability’, such that the speed of light is $c = 1/\sqrt{\epsilon\mu}$.
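The claim that the two left-hand equations imply charge conservation, whatever $\epsilon$ and $\mu$ do, can be checked in one line. Writing them, as assumed here for a position- and time-dependent $\epsilon$ and $\mu$, as $\nabla\cdot(\epsilon\mathbf{E})=\rho$ and $\nabla\times(\mathbf{B}/\mu)-\partial(\epsilon\mathbf{E})/\partial t=\mathbf{J}$, take the divergence of the second and use the identity $\nabla\cdot(\nabla\times\mathbf{V})=0$:

$$0=\nabla\cdot\left[\nabla\times\left(\frac{\mathbf{B}}{\mu}\right)\right]=\nabla\cdot\mathbf{J}+\frac{\partial}{\partial t}\,\nabla\cdot(\epsilon\mathbf{E})=\nabla\cdot\mathbf{J}+\frac{\partial\rho}{\partial t}.$$

No assumption about how $\epsilon(\mathbf{x},t)$ or $\mu(\mathbf{x},t)$ vary enters this step, which is why charge can stay conserved while the permittivity is varied.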

Bekenstein showed that if the permittivities $\epsilon$ were varied, then the permeabilities $\mu$ could always be varied inversely, so that the speed of light was kept constant and Einstein would be kept happy. Einstein’s theories of relativity were built on the constancy of the speed of light.

Most electric charges in living organisms move slowly, at least much less than the speed of light, so biomagnetic effects are smaller than bioelectric effects. That means we can largely ignore the magnetic fields $\mathbf{B}$ and stick to electrostatics with just $\mathbf{E}$, which is much simpler. The theory now has the charge density $\rho$ as the source of the electric field $\mathbf{E}$:

$$\nabla\cdot(\epsilon\mathbf{E}) = \rho .$$

From this equation we can calculate the electric fields however much the permittivity $\epsilon$ may vary. The new form of Coulomb’s law depends on the permittivity $\epsilon_1$ at charge $q_1$, and on $\epsilon_2$ at $q_2$, as

$$\mathbf{F}_1 = \frac{1}{4\pi\sqrt{\epsilon_1\epsilon_2}}\,\frac{q_1 q_2\,(\mathbf{r}_1-\mathbf{r}_2)}{|\mathbf{r}_1-\mathbf{r}_2|^3}$$

If $\epsilon_1 = \epsilon_2 = \epsilon_0$, then this equation reverts to the standard Coulomb’s law above. This new equation still allows us to calculate, for example, how protein molecules move. Remember that we are now varying the permittivities of the vacuum, not just varying them in solid dielectric compounds or in water.
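A minimal sketch of a local-permittivity Coulomb force, assuming the symmetric form $\mathbf{F}_1 = q_1q_2(\mathbf{r}_1-\mathbf{r}_2)/(4\pi\sqrt{\epsilon_1\epsilon_2}\,|\mathbf{r}_1-\mathbf{r}_2|^3)$, with $\epsilon_1$ and $\epsilon_2$ the vacuum permittivities at the two charges. The geometric mean is an assumption of this sketch; it reduces to Coulomb's law when $\epsilon_1=\epsilon_2=\epsilon_0$ and keeps the forces on the two charges equal and opposite.

```python
import math

EPS0 = 8.8541878128e-12          # vacuum permittivity, F/m
E_CHARGE = 1.602176634e-19       # elementary charge, C


def local_coulomb_force(q1, r1, eps1, q2, r2, eps2):
    """Force (N) on q1 at r1 from q2 at r2, with local permittivities eps1 at q1 and eps2 at q2."""
    sep = [a - b for a, b in zip(r1, r2)]
    dist = math.sqrt(sum(c * c for c in sep))
    scale = q1 * q2 / (4.0 * math.pi * math.sqrt(eps1 * eps2) * dist ** 3)
    return tuple(scale * c for c in sep)


r1, r2 = (0.0, 4e-10, 0.0), (0.0, 0.0, 0.0)
standard = local_coulomb_force(E_CHARGE, r1, EPS0, E_CHARGE, r2, EPS0)
raised = local_coulomb_force(E_CHARGE, r1, 1.2 * EPS0, E_CHARGE, r2, EPS0)    # eps up 20% at q1
reaction = local_coulomb_force(E_CHARGE, r2, EPS0, E_CHARGE, r1, 1.2 * EPS0)  # force on q2
print("standard:", standard[1], "N; with eps1 = 1.2 eps0:", raised[1], "N")
```

Raising the permittivity by 20% at one charge weakens the repulsion by a factor $1/\sqrt{1.2}$, without changing either charge.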

5 Energy is not always conserved locally

There is one clear consequence of this new kind of electric equation: energy and momentum are not always conserved. Noether’s theorem (Emmy Noether, 1918) implies that, if the laws of physics depend explicitly on time, then energy is not necessarily conserved locally. If the laws have terms that vary with time, then energy is not conserved, and if the laws have terms that vary with position, then momentum is not conserved. Here the permittivity $\epsilon(\mathbf{x}, t)$ varies in space $\mathbf{x}$ and time $t$, so neither energy nor momentum is conserved in our case.

We can see immediately from the expression for the electrostatic energy

$$U = \frac{1}{4\pi\sqrt{\epsilon_1\epsilon_2}}\,\frac{q_1 q_2}{|\mathbf{r}_1-\mathbf{r}_2|}$$

that if the permittivities in the denominator vary with time even when the charges remain fixed, then the energy $U$ is going to increase or decrease.
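A minimal sketch of the point, assuming the two-charge energy $U = q_1q_2/(4\pi\sqrt{\epsilon_1\epsilon_2}\,r)$ and a hypothetical permittivity at charge 1 that oscillates by ±5% with a 1 µs period. Both charges stay at a fixed separation, so no mechanical work is done on either of them.

```python
import math

EPS0 = 8.8541878128e-12          # vacuum permittivity, F/m
E_CHARGE = 1.602176634e-19       # elementary charge, C


def pair_energy(q1, q2, r, eps1, eps2):
    """Electrostatic energy (J) of two charges a distance r apart, assuming the
    local-permittivity form U = q1 q2 / (4 pi sqrt(eps1 eps2) r)."""
    return q1 * q2 / (4.0 * math.pi * math.sqrt(eps1 * eps2) * r)


def eps_at_charge1(t, amplitude=0.05, period=1e-6):
    """Hypothetical permittivity at charge 1, oscillating by +/-5% with a 1 microsecond period."""
    return EPS0 * (1.0 + amplitude * math.sin(2.0 * math.pi * t / period))


R = 5e-10                        # fixed separation: the charges never move, so no work is done
times = [0.0, 0.25e-6, 0.5e-6, 0.75e-6]
energies = [pair_energy(E_CHARGE, -E_CHARGE, R, eps_at_charge1(t), EPS0) for t in times]
for t, u in zip(times, energies):
    print(f"t = {t * 1e6:.2f} us: U = {u / E_CHARGE:.4f} eV")
```

The energy rises and falls by a few percent with no motion and no work, which is the local non-conservation of energy described above.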

Previous scientists have taken the conservation of energy as fixed beyond doubt, and they have tried to find ways of allowing non-physical inputs while not ‘violating’ the conservation of energy. For example, John Eccles has talked of external inputs biasing the probabilities of events in quantum mechanics. Henry Stapp wants minds to vary the timing of probabilistic quantum events. Others have thought of moving energy a short distance from one location to a nearby one, but that does not conserve energy fully. Amit Goswami has thought of using non-local entanglement for communication, but keeping to the laws of quantum mechanics does not allow any signals or information to be conveyed by non-local entanglements. Remember that chances in quantum mechanics are very small, and entanglement is very difficult to maintain in warm bodies. The electric permittivity changes being proposed here will have much larger effects.

For those who still worry about failing to have energy conservation, we have to ask what has been experimentally observed. We are talking about deliberate variations inside living cells, and we have not yet experimentally observed energy to be conserved at all locations inside such an organism. Any general argument that starts from physical closure and concludes in energy conservation is clearly based on a starting point, the absence of any non-physical causes, that is precisely the question under debate, so it cannot serve as a starting assumption. We will need experimental measurements of permittivities and energies before any scientific conclusion can be reached (for or against).

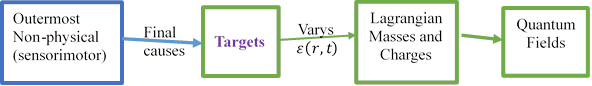

6 ‘Targets’ as a new level of physics for physiology

The remaining part of this paper presents a mechanism for how an organism could vary the permittivities $\epsilon$ in order to achieve useful ends or targets within its body. Having ends or targets amounts to final causes operating in the body, and those final causes can be seen as the kind of input needed from the non-physical for the purpose of achieving new order in the physical body. So I propose a new discrete degree of ‘targets’ between the non-physical and the inmost degree of the body:

Non-physical (sensorimotor mind) ➔ Targets ➔ Field Lagrangian (masses and charges)

So, in order from the left, we have first the outermost non-physical such as the sensorimotor mind, secondly the new degree of targets, which are set by the non-physical to be the required physical shape not yet achieved, and then the field Lagrangian which has the masses and charges and is the inner-most physical degree known so far.

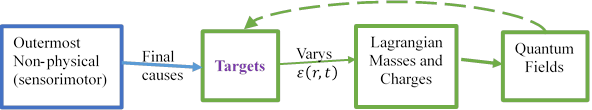

We can gain some understanding of how targets might operate by looking at how organisms develop while growing and how they regrow to their normal form after injury or transplants. Michael Levin[12] summarizes his observations by concluding that cells need a feedback system to

1) know the shape S the cell is supposed to achieve (its target),

2) tell if its current shape S0 differs from this target,

3) compute by means-ends analysis how to get from S0 to S,

4) perform a kind of self-surveillance to know when the desired shape has been reached.

That is, we have to add to the above diagram an additional function for targets, which is to calculate a difference or ‘error’ between the current state of the organism and its target state. This is the green dashed line:

This gives us three main functions for Targets:

- Receive a final cause from the non-physical degree, giving the target configuration.

- Determine differences between the current state and the target configuration.

- Vary electric permittivities to minimize the difference, and so achieve the target.
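Levin's four requirements, combined with the three target functions listed above, describe an ordinary error-correcting feedback loop. The sketch below is a generic proportional controller over a list of numbers standing in for a ‘shape’; the gain, tolerance and shape descriptors are hypothetical, and the loop does not model how the correction would be carried out physically.

```python
def reach_target(shape, target, gain=0.5, tol=1e-6, max_steps=1000):
    """Generic feedback loop: returns the final shape and the number of correction steps used."""
    current = list(shape)
    for step in range(max_steps):
        # Compare the current state with the target (the 'error' signal).
        error = [t - c for t, c in zip(target, current)]
        # Self-surveillance: stop once every component is within tolerance.
        if max(abs(e) for e in error) < tol:
            return current, step
        # Means-ends step: move each component a fraction 'gain' of the way to the target.
        current = [c + gain * e for c, e in zip(current, error)]
    return current, max_steps


final_shape, steps = reach_target([0.0, 0.0, 0.0], [1.0, 2.0, 3.0])
print(f"reached {final_shape} after {steps} correction steps")
```

With a gain of 0.5 the error halves on every pass, so a few dozen corrections suffice; a real targeting system would replace the direct update with physical permittivity changes.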

I propose that this is done in a physical degree, so that the non-physical degree is not needed for every molecular step. The ‘target’ degree may have to anticipate futures if needed, but without using time travel, and this may result in some kind of physical feedback loop. We note that ‘predictive processing’ models in psychology and cognition[13] find it very useful to include kinds of error or difference inputs, and they may have similar functional features. In both fields there are still some details to work out.

7 Metric time and process time

Such a system would be much more effective if it could anticipate configurations in the near future, so it could hit a moving target while not always playing catch-up to the location seen most recently. Think of trying to dock a polypeptide with an enzyme, when both are always on the move from thermal motion. On a larger scale, imagine a flying bird trying to intercept an airborne seed. It would be more efficient if a living organism could adjust permittivities over the whole finite time interval from now until the time of the targeted interception. Such a seeming time-travel can be done even in physics if we separate the dynamics in ‘metric time’ from ‘process time’, within the overall view of time called the ‘growing block universe’.

In the Growing Block Universe view of time[14], the past is 100% definite but the future is not. Thus we can have the future informing us of the results of the present causes, even before the present causes are completely settled and decided. The ‘block’ under discussion is what exists in metric time, which can be combined with space to form the four-dimensional spacetime popular in presentations of Einstein’s special theory of relativity. The ‘growing’ under discussion is the progressive addition by coming to be of new past events, which are what is actual and definitely happened. In the half of the metric spacetime that is the future, we can envisage the extrapolated forms of the present causes. Those are ‘previews’ of the future and can exist even before the present causes are completely settled and actualized. The general passage of process time is that of potentialities in the future region making actual and definite actualized-events in the past region. Henri Bergson advocated such a lived or process time, and A.N. Whitehead made process the center of his Process and Reality[15]. Many thinkers have seen something like process time necessary in order to understand the changes of state in quantum physics when taken as the successive probabilistic actualizations of propensities or quantum selections[16].

In our theory of targets, we are going to use both kinds of time. We extrapolate in metric time some present causes into effects in the near future of spacetime, as the metric future exists now, already. Because metric time is not directional, we can also use it to obtain the differences between the current-extrapolated future and some specified target arrangement. We use process time to allow a targeting system to adjust causes before they are fully actualized.

We are going to require measuring future differences and allowing permittivity changes over a range of times. We will postulate, first, that a targeting system can use physical waves propagated forward in metric time to establish a difference between that extrapolation and the target at the target-time. Secondly, we postulate that adjoint waves can propagate backwards in metric time[17] from the target-time back to the present, as do, for example, the time-reversed solutions of Maxwell equations (for electromagnetic waves), the time-reversed fields in quantum mechanics (used all the time in field theory), and the time-reversed solutions of Newton equations (for particles in molecular dynamics). Those adjoint waves can be integrated to give the sensitivities needed to vary the permittivities so as to reduce the difference between the extrapolation and the target. If these forward and backward waves could be repeated at intermediate process times, then suitable permittivity adjustments should be found such that the physical system reaches the target in reality[18].
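For readers who want the forward-and-adjoint idea in equations, the standard adjoint-sensitivity equations of optimal control are sketched below. They assume, for illustration, classical dynamics $\dot{\mathbf{y}} = \mathbf{f}(\mathbf{y}, \epsilon(t))$ for the positions and velocities $\mathbf{y}$, and a squared-distance mismatch with the target $\mathbf{y}^{*}$ at the target time $T$. The forward pass gives $\mathbf{y}(T)$; the adjoint $\boldsymbol{\lambda}$ is then integrated backwards from $T$; and its overlap with $\partial\mathbf{f}/\partial\epsilon$ gives the sensitivity of the mismatch to the permittivity at each earlier time. Whether this matches the paper's precise formulation is an assumption.

$$L=\tfrac12\,\lVert\mathbf{y}(T)-\mathbf{y}^{*}\rVert^{2},\qquad \boldsymbol{\lambda}(T)=\mathbf{y}(T)-\mathbf{y}^{*},\qquad \frac{d\boldsymbol{\lambda}}{dt}=-\left(\frac{\partial\mathbf{f}}{\partial\mathbf{y}}\right)^{\!\top}\boldsymbol{\lambda},$$

$$\frac{\delta L}{\delta\epsilon(t)}=\boldsymbol{\lambda}(t)^{\top}\,\frac{\partial\mathbf{f}}{\partial\epsilon}\bigg|_{t},\qquad \epsilon(t)\;\leftarrow\;\epsilon(t)-\eta\,\frac{\delta L}{\delta\epsilon(t)}.$$

The step size $\eta$ is a free parameter; repeating the forward pass, the backward pass and the update corresponds to the iteration over intermediate process times described above.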

8 Numerical models of changing proteins

In order to test whether such a backwards-and-forwards scheme could work in practice, I made a toy numerical simulation of a protein molecule in a chaperone cage, of the kind often used in cells to fold molecules that do not spontaneously arrange themselves correctly. The protein molecule had 100 amino-acid units of either positive or negative charge, and the cage had 16 charges around its inside surface in two rings of 8 each. Using forward and adjoint waves, a gradient-descent optimizer could at each iteration calculate which combination of permittivity changes should begin to minimize the discrepancy between the extrapolated forward wave and some specified target configuration.[19] It was found possible to move molecules around in the cage, rotate them by some given angle, and re-arrange segments of the 100-unit chain. Sometimes, however, the target discrepancy gets stuck in a local minimum not close to the overall target, so more theory and numerical testing will be needed to establish the full range within which this method is a reliable way of physically leading a molecule to given target configurations.
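A drastically reduced version of such a simulation fits in a few dozen lines. The sketch below is not the author's code: it follows a single charge pushed away from a fixed charge at the origin, in one dimension and in dimensionless units. The control variables are hypothetical relative vacuum permittivities $s_k = \epsilon/\epsilon_0$ during each time step, the gradient of the final mismatch comes from a discrete adjoint (reverse) pass through the time steps, and plain gradient descent updates the $s_k$. All numbers (step size, target, learning rate) are illustrative assumptions.

```python
def simulate(s, x0=1.0, v0=0.0, dt=0.05, c=1.0):
    """Forward pass (semi-implicit Euler). During step k the acceleration is c / (s[k] x^2),
    i.e. Coulomb repulsion weakened or strengthened by the relative permittivity s[k]."""
    xs, x, v = [x0], x0, v0
    for sk in s:
        v += dt * c / (sk * x * x)
        x += dt * v
        xs.append(x)
    return xs


def loss_and_gradient(s, x_target, dt=0.05, c=1.0):
    """Loss 0.5 (x_N - x_target)^2 and its gradient with respect to every s[k],
    computed by propagating adjoint variables backwards through the steps."""
    xs = simulate(s, dt=dt, c=c)
    err = xs[-1] - x_target
    lam_x, lam_v = err, 0.0  # adjoints of x_{k+1} and v_{k+1}
    grad = [0.0] * len(s)
    for k in reversed(range(len(s))):
        lam_v_total = lam_v + dt * lam_x  # v_{k+1} also enters x_{k+1} = x_k + dt v_{k+1}
        xk, sk = xs[k], s[k]
        grad[k] = lam_v_total * dt * (-c / (sk * sk * xk * xk))  # via d(accel)/d(s_k)
        lam_x += lam_v_total * dt * (-2.0 * c / (sk * xk ** 3))  # via d(accel)/d(x_k)
        lam_v = lam_v_total
    return 0.5 * err * err, grad


def optimize(n_steps=20, x_target=1.2, lr=100.0, iterations=500, s_min=0.1):
    """Gradient descent on the permittivity schedule, starting from the ordinary vacuum (s = 1)."""
    s = [1.0] * n_steps
    history = []
    for _ in range(iterations):
        loss, grad = loss_and_gradient(s, x_target)
        history.append(loss)
        s = [max(s_min, sk - lr * g) for sk, g in zip(s, grad)]
    return s, history


s_opt, history = optimize()
print(f"loss: {history[0]:.3e} -> {history[-1]:.3e}; final position {simulate(s_opt)[-1]:.4f}")
print("permittivity schedule:", [round(v, 2) for v in s_opt])
```

Each iteration costs one forward and one backward sweep, independent of the number of control variables, which is why adjoint methods are preferred over perturbing each permittivity separately. With many interacting charges, as in the caged protein, the same loss surface can develop the local minima mentioned above.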

9 Testing Predictions

Predictions of methods of reaching targets should be tested experimentally. Some general predictions are that physical processes proceed more quickly to a useful result in vivo, within a living cell, than in vitro (in a glass test tube)[20], and that this should happen particularly with charged particles and atoms (not with neutrons, for example). Some specific predictions are that electric effects in living organisms fluctuate because of targeting mechanisms in operation. The vacuum permittivity should change in atoms and in cells, though we must distinguish these changes from solvent permittivity changes, especially in water.

Note that this model only predicts changes of the physics parameters needed to get useful results, which probably do not extend very far into the surrounding regions. Permittivity changes can be detected by measuring energy-level changes in atoms within the causal chain that reaches the target. Atoms will have brief shifts in energies, so fluorescent light should briefly show changes in wavelength, changes which can be measured easily and accurately by modern spectrometers. The wavelength shifts of absorption and fluorescent emission lines were how Webb’s group measured electromagnetic shifts in atoms in distant stars.
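A rough sense of scale for such measurements, as a sketch under stated assumptions: the fine-structure constant is $\alpha = e^2/(4\pi\epsilon_0\hbar c)$, so with $e$, $\hbar$ and $c$ held fixed, raising the vacuum permittivity by a fraction $\delta$ lowers $\alpha$ by the factor $1/(1+\delta)$. If the relevant levels scale like hydrogen-like gross structure, $E_n = -\alpha^2 m_e c^2/(2n^2)$, every transition energy falls by $(1+\delta)^{-2}$ and every wavelength grows by $(1+\delta)^2$, about $2\delta$ fractionally. Fluorescent molecules in cells are more complicated; hydrogen Lyman-alpha and $\delta = 10^{-6}$ are used here only as a convenient hypothetical test case.

```python
ALPHA_0 = 7.2973525693e-3        # fine-structure constant (CODATA 2018)
ME_C2_EV = 510998.95             # electron rest energy in eV
HC_EV_NM = 1239.841984           # h*c in eV*nm


def lyman_alpha_nm(alpha):
    """Hydrogen n=2 -> n=1 wavelength from E_n = -alpha^2 m c^2 / (2 n^2)
    (infinite nuclear mass, no fine structure)."""
    transition_ev = 0.5 * alpha ** 2 * ME_C2_EV * (1.0 - 1.0 / 4.0)
    return HC_EV_NM / transition_ev


def alpha_for_permittivity_change(delta):
    """alpha scales as 1/epsilon when e, hbar and c are held fixed."""
    return ALPHA_0 / (1.0 + delta)


DELTA = 1e-6                     # hypothetical fractional rise in vacuum permittivity
lam0 = lyman_alpha_nm(ALPHA_0)
lam1 = lyman_alpha_nm(alpha_for_permittivity_change(DELTA))
print(f"Lyman-alpha {lam0:.4f} nm shifts by {(lam1 - lam0) * 1e3:.3f} pm "
      f"(fractional {(lam1 - lam0) / lam0:.3e})")
```

A part-per-million permittivity change thus gives a shift of a fraction of a picometre at this wavelength, so any real test would hinge on spectrometer resolution and on separating vacuum effects from solvent effects.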

10 Implications

Should these effects on permittivity be measured, we would begin to have an understanding of how non-physical things could have effects in nature. The mechanism proposed here would show how final causes and targets could be active in biology. Science has tried to remove them for the last 500 years, but now we see some primitive kinds of teleology active even within physics. We have a way to bring the future into line, without time travel disrupting the past. The scope of investigation here widens the field of possible scientific explanations concerning mental and other non-physical causes, and no longer need the physical universe be causally closed.

Acknowledgments

I wish to thank Ron Horvath, Andy Heilman, Steve Smith, Forrest Dristy and Gard Perry for useful discussions and encouragement.

Ian J. Thompson: Livermore, CA 94550, USA

Current address: Lawrence Livermore National Laboratory L-414, Livermore, CA 94550, USA

Website: http://www.ianthompson.org Email: ijt@ianthompson.org

Pdf version: DR3-1-I.Thompson.pdf

Expanded from a talk on July 24 at SSE-PA Connections 2021: A combined meeting of the Society for Scientific Exploration and the Parapsychological Association.

Prerecorded video at https://www.youtube.com/watch?v=IA5ssfmzn90

Powerpoint at https://www.newdualism.org/review/vol3/Showing-in-physics-v7.pptx

Philpapers: https://philpapers.org/rec/THOHTN-3